Podcasts about hadoop

Distributed data processing framework

- 310PODCASTS

- 773EPISODES

- 40mAVG DURATION

- 1MONTHLY NEW EPISODE

- Feb 10, 2026LATEST

POPULARITY

Categories

Best podcasts about hadoop

Latest news about hadoop

- 21 Best System Design Courses for Programming Interviews in 2026 Javarevisited - Feb 17, 2026

- Hands-On Linux Practice: Processes, Services, and Logs DevOps on Medium - Feb 2, 2026

- Spark vs Hadoop: comparativa de herramientas en el ecosistema Big Data Technology on Medium - Jan 30, 2026

- Pinterest's Moka: How Kubernetes Is Rewriting the Rules of Big Data Processing InfoQ - Jan 19, 2026

- 350PB, Millions of Events, One System: Inside Uber’s Cross-Region Data Lake and Disaster Recovery InfoQ - Architecture & Design - Jan 16, 2026

- Top 12 GCP Services for Data Engineers in 2026 — Build Future-Proof Pipelines Google Cloud - Community - Medium - Jan 6, 2026

- Hadoop Operations: A Guide for Developers and Administrators - down 8.09% ($1.44) to $16.37 from $17.81 Top Amazon Price Drops - Dec 10, 2025

- A Founder's Guide to Data Strategy & Analytics Tomasz Tunguz - Oct 9, 2025

Latest podcast episodes about hadoop

How Collate turned 12,000 open source users into an inbound sales engine | Suresh Srinivas

Collate is building a semantic intelligence platform that unifies fragmented metadata tooling across the modern data stack. With 12,000+ community members, 3,000+ open source deployments, and 400+ code contributors, the company has proven that open source can be a systematic GTM engine, not just a distribution tactic. In this episode of BUILDERS, I sat down with Suresh Srinivas, Co-Founder & CEO of Collate, to explore his journey from the Hadoop core team at Yahoo, through founding Hortonworks, to architecting data systems processing 4 trillion events daily at Uber—and why that experience led him to rebuild metadata infrastructure from scratch. Topics Discussed: Why platform builders at Yahoo and Hortonworks struggled to drive business value despite powerful technology The metadata fragmentation problem: how siloed tools lack unified vocabularies and end-to-end context Collate's contrarian decision to build Open Metadata from zero rather than spinning out Uber's internal tooling Engineering an open core GTM model that generates nearly 100% inbound sales from technical practitioners Scaling community contribution: moving from feedback loops to 400+ code contributors Hiring a CMO to translate technical value into business-leader messaging without losing practitioner trust The convergence thesis: structured data, knowledge graphs, and semantic layers as the foundation for reliable AI GTM Lessons For B2B Founders: Architect your open source for GTM leverage, not just distribution: Suresh built Open Metadata as a unified platform consolidating data discovery, observability, and governance—previously fragmented across multiple tools. This architectural decision created natural upgrade paths to Collate's managed offering. The lesson: open source architecture should solve a complete job-to-be-done that reveals commercial value through usage, not just demonstrate technical capability. 100+ daily practitioner conversations beats any user research: Collate maintains ongoing dialogue with their community across Snowflake, Databricks, and other integrations. Suresh called this "a product manager's dream"—immediate feedback on what breaks, what's missing, and what workflow improvements matter. For infrastructure startups, this beat rate of validated learning is nearly impossible to replicate through traditional customer development. High-velocity releases build credibility faster than pedigree: Starting from scratch without Yahoo or Uber's brand meant proving commitment through shipping cadence. Collate's strategy: demonstrate you'll be around and responsive before asking for production deployments. This matters more in open source than closed-source where sales cycles force commitment conversations earlier. Separate technical-buyer and business-buyer GTM motions explicitly: Collate's founding team spoke fluently to data engineers and architects who lived the metadata problem daily. Their CMO hire (after establishing product-market fit) brought expertise in articulating business impact—ROI on data initiatives, compliance risk reduction, AI readiness—without the founders faking business-speak. The timing matters: hire for the motion you're entering, not the one you're in. Play the long game with builder-culture companies: At Uber, internal tools were 2-3 years ahead of vendor solutions but became technical debt as teams moved to new problems. Suresh's advice: "Keep in touch with these larger companies. Your technology will improve and you will have better conversation with larger technical companies." The wedge is timing—catch them when maintenance burden outweighs building pride, typically 24-36 months post-launch. Design for all company scales from day one: Unlike Uber's internal metadata platform built for massive scale with corresponding complexity, Open Metadata works for small teams through enterprises. This wasn't just good design—it was GTM expansion strategy. Building only for scale locks you into enterprise-only sales. Building only for simplicity caps your ACV. The middle path requires architectural discipline upfront. // Sponsors: Front Lines — We help B2B tech companies launch, manage, and grow podcasts that drive demand, awareness, and thought leadership. www.FrontLines.io The Global Talent Co. — We help tech startups find, vet, hire, pay, and retain amazing marketing talent that costs 50-70% less than the US & Europe. www.GlobalTalent.co // Don't Miss: New Podcast Series — How I Hire Senior GTM leaders share the tactical hiring frameworks they use to build winning revenue teams. Hosted by Andy Mowat, who scaled 4 unicorns from $10M to $100M+ ARR and launched Whispered to help executives find their next role. Subscribe here: https://open.spotify.com/show/53yCHlPfLSMFimtv0riPyM

226: Why VCs regret not investing in Acceldata - From IIT to Silicon Valley

Enterprise AI doesn't fail because models are weak. It fails because data pipelines break, permissions drift, costs explode, and governance is treated like an annual checkbox instead of everyday operational discipline.In this episode of Intelligent Indians!, Vikram Vaidyanathan sits down with Rohit Choudhury, Founder & CEO of Acceldata, for a deep conversation on how enterprise data systems actually behave at scale and why AI makes every weak foundation visible.Rohit's founder journey mirrors the last 15 years of data infrastructure: from scaling InMobi in the pre-cloud era, to the Hadoop days at Hortonworks, to pioneering data observability, and now pushing into Agentic Data Management as enterprises move from AI pilots to production.The conversation goes deep into:1. Why AI POCs often work in one team but collapse at enterprise scale 2. The real cost of fixing data late, and why ingestion is the only place to fix it3. How AI agents change the stakes: real-time failures, hallucinations explainability and ai updates4. A practical playbook for CIOs and CDOs: SLAs, rapid experimentation, and leadership upskilling5. Why cost control is becoming existential, from reruns to cloud bills to private/hybrid AIThe “India edge” in building global enterprise companies, and what DPI × AI could unlock nextWatch the full episode to understand what it really takes to make AI work at enterprise scale.Chapters:00:00 Why AI POCs Fail at Scale in Enterprises02:30 Introducing Rohit Choudhury (Acceldata Founder)05:30 Early Days at InMobi & Rewriting the Stack 3 Times08:30 First Startup Failure & Learning Customer Obsession11:30 Birth of the Data Observability Category14:30 How Enterprise Data Pipelines Really Work (1000+ Apps)18:00 Why Enterprises Need End-to-End Data Visibility21:00 COVID Story & Missed Investment Moment24:00 Why hallucinations are dangerous for enterprises27:00 Governance in AI30:00 How Banks Should Prepare for AI at Scale (4 Steps)33:30 Why Leaders Must Learn AI Themselves (Cursor Story)36:30 From Tool Sprawl to One Agentic Platform39:30 Cost Explosion: Cloud vs Private AI42:30 Self-Healing Data & Preventing AI Hallucinations45:00 – Future of Enterprise AI & Closing ThoughtsFollow Z47Website - https://www.z47.com/Instagram - https://www.instagram.com/z47.vc/LinkedIn - https://www.linkedin.com/company/z47-vc/#podcast #podcasts #future #ai #aiindia #aicourses #aiupdates

Security, compliance, and resilience are the cornerstones of trust. In this episode, Lois Houston and Nikita Abraham continue their conversation with David Mills and Tijo Thomas, exploring how Oracle Cloud Infrastructure empowers organizations to protect data, stay compliant, and scale with confidence. Real-world examples from Zoom, KDDI, 8x8, and Uber highlight these capabilities. Cloud Business Jumpstart: https://mylearn.oracle.com/ou/course/cloud-business-jumpstart/152957 Oracle University Learning Community: https://education.oracle.com/ou-community LinkedIn: https://www.linkedin.com/showcase/oracle-university/ X: https://x.com/Oracle_Edu Special thanks to Arijit Ghosh, David Wright, Kris-Ann Nansen, Radhika Banka, and the OU Studio Team for helping us create this episode. ------------------------------------------------------------- Episode Transcript: 00:00 Welcome to the Oracle University Podcast, the first stop on your cloud journey. During this series of informative podcasts, we'll bring you foundational training on the most popular Oracle technologies. Let's get started! 00:26 Lois: Hello and welcome to the Oracle University Podcast! I'm Lois Houston, Director of Communications and Adoption with Customer Success Services, and with me is Nikita Abraham, Team Lead: Editorial Services with Oracle University. Nikita: Hi everyone! In our last episode, we started the conversation around the real business value of Oracle Cloud Infrastructure and how it helps organizations create impact at scale. Lois: Today, we're taking a closer look at what keeps the value strong — things like security, compliance, and the technology that helps businesses stay resilient. To walk us through it, we have our experts from Oracle University, David Mills, Senior Principal PaaS Instructor, and Tijo Thomas, Principal OCI Instructor. 01:12 Nikita: Hi David and Tijo! It's great to have you both here! Tijo, let's start with you. How does Oracle Cloud Infrastructure help organizations stay secure? Tijo: OCI uses a security first approach to protect customer workloads. This is done with implementing a Zero Trust Model. A Zero Trust security model use frequent user authentication and authorization to protect assets while continuously monitoring for potential breaches. This would assume that no users, no devices, no applications are universally trusted. Continuous verification is always required. Access is granted only based on the context of request, the level of trust, and the sensitivity of that asset. There are three strategic pillars that Oracle security first approach is built on. The first one is being automated. With automation, the business doesn't have to rely on any manual work to stay secure. Threat detection, patching, and compliance checks, all these happen automatically. And that reduces human errors and also saving time. Security in OCI is always turned on. Encryption is automatic. Identity checks are continuous. Security is not an afterthought in OCI. It is incorporated into every single layer. Now, while we talk about Oracle's security first approach, remember security is a shared responsibility, and what that means while Oracle handles the data center, the hardware, the infrastructure, software, consumers are responsible for securing their apps, configurations and the data. 03:06 Lois: Tijo, let's discuss this with an example. Imagine an online store called MuShop. They're a fast-growing business selling cat products. Can you walk us through how a business like this can enhance its end-to-end security and compliance with OCI? Tijo: First of all, focusing on securing web servers. These servers host the web portal where customers would browse, they log in, and place their orders. So these web servers are a prime target for attackers. To protect these entry points, MuShop deployed a service called OCI Web Application Firewall. On top of that, the MuShop business have also used OCI security list and network security groups that will control their traffic flow. As when the businesses grow, new users such as developers, operations, finance, staff would all need to be onboarded. OCI identity services is used to assign roles, for example, giving developers access to only the dev instances, and finance would access just the billing dashboards. MuShop also require MFA multi-factor authentication, and that use both password and a time-based authentication code to verify their identities. Talking about some of the critical customer data like emails, addresses, and the payment info, this data is stored in databases and storage. Using OCI Vault, the data is encrypted with customer managed keys. Oracle Data Safe is another service, and that is used to audit who has got access to sensitive tables, and also mask real customer data in non-production environments. 04:59 Nikita: Once those systems are in place, how can MuShop use OCI tools to detect and respond to threats quickly? Tijo: For that, MuShop used a service called OCI Cloud Guard. Think of it like a security operation center, and which is built right into OCI. It monitors the entire OCI environment continuously, and it can track identity activities, storage settings, network configurations and much more. If it finds something risky, like a publicly exposed object storage bucket, or maybe a user having a broad access to that environment, it raises a security finding. And better yet, it can automatically respond. So if someone creates a resource outside of their policy, OCI Cloud Guard can disable it. 05:48 Lois: And what about preventing misconfigurations? How does OCI make that easier while keeping operations secure? Tijo: OCI Security Zone is another service and that is used to enforce security postures in OCI. The goody zones help you to avoid any accidental misconfigurations. For example, in a security zone, you can choose users not to create a storage bucket that is publicly accessible. To stay ahead of vulnerabilities, MuShop runs OCI vulnerability scanning. They have scheduled to scan weekly to capture any outdated libraries or misconfigurations. OCI Security Advisor is another service that is used to flag any unused open ports and with recommending stronger access rules. MuShop needed more than just security. They also had to be compliant. OCI's compliance certifications have helped them to meet data privacy and security regulations across different regions and industries. There are additional services like OCI audit logs for traceability that help them pass internal and external audits. 07:11 Oracle University is proud to announce three brand new courses that will help your teams unlock the power of Redwood—the next generation design system. Redwood enhances the user experience, boosts efficiency, and ensures consistency across Oracle Fusion Cloud Applications. Whether you're a functional lead, configuration consultant, administrator, developer, or IT support analyst, these courses will introduce you to the Redwood philosophy and its business impact. They'll also teach you how to use Visual Builder Studio to personalize and extend your Fusion environment. Get started today by visiting mylearn.oracle.com. 07:52 Nikita: Welcome back! We know that OCI treats security as a continuous design principle: automated, always on, and built right into the platform. David, do you have a real-world example of a company that needed to scale rapidly and was able to do so successfully with OCI? David: In late 2019, Zoom averaged 10 million meeting participants a day. By April 2020, well that number surged to over 300 million as video conferencing became essential for schools, businesses, and families around the world due to the global pandemic. To meet that explosive demand, Zoom chose OCI not just for performance, but for the ability to scale fast. In just nine hours, OCI engineers helped Zoom move from deployment to live production, handling hundreds of thousands of concurrent meetings immediately. Within weeks, they were supporting millions. And Zoom didn't just scale, they sustained it. With OCI's next-gen architecture, Zoom avoided the performance bottlenecks common in legacy clouds. They used OCI functions and cloud native services to scale workloads flexibly and securely. Today, Zoom transfers more than seven petabytes of data per day through Oracle Cloud. That's enough bandwidth to stream HD video continuously for 93 years. And they do it while maintaining high availability, low latency, and enterprise grade security. As articulated by their CEO Erik Yuan, Zoom didn't just meet the moment, they redefined it with OCI behind the scenes. 09:45 Nikita: That's an incredible story about scale and agility. Do you have more examples of companies that turned to OCI to solve complex data or integration challenges? David: Telecom giant KDDI with over 64 million subscribers, faced a growing data dilemma. Data was everywhere. Survey results, system logs, behavioral analytics, but it was scattered across thousands of sources. Different tools for different tasks created silos, delays, and rising costs. KDDI needed a single platform to connect it all, and they chose Oracle. They replaced their legacy data systems with a modern data platform built on OCI and Autonomous Database. Now they can analyze behavior, improve service planning, and make faster, smarter decisions without the data chaos. But KDDI didn't stop there. They built a 300 terabyte data lake and connected all their systems-- custom on-prem apps, SaaS providers like Salesforce, and even multi-cloud infrastructure. Thanks to Oracle Integration and pre-built adapters, everything works together in real-time, even across clouds. AWS, Azure, and OCI now operate in harmony. The results? Reduced operational costs, faster development cycles, governance and API access improved across the board. KDDI can now analyze customer behavior to improve services like where to expand their 5G network. Next up, 8 by 8 powers communication for over 55,000 companies and 160 countries with more than 3 million users, depending on its voice, video, and messaging tools every day. To maintain that scale, they needed a cloud that could deliver low latency global availability and high performance without blowing up costs. Well, they moved their video meeting services from Amazon to OCI and went live in just four days. The results? 25% increase in performance per node, 80% reduction in network egress costs, and a significantly lower overall infrastructure spend. But this wasn't just a lift and shift. 8 by 8 also replaced legacy tools with Oracle Logging Analytics, giving their teams a single view across apps, infrastructure, and regions. 8 by 8 scaled up fast. They migrated core voice services, deployed over 300 microservices using OCI Kubernetes, and now run over 1,700 nodes across 26 global OCI regions. In addition, OCI's Ampere-based virtual machines gave them a major boost, sustaining 80% CPU utilization and more than 30% increased performance per core and with no degradation. And with OCI's Observability and Management platform, they gained real-time visibility into application health across both on-prem and cloud. Bottom line, 8x8 represents yet another excellent example of a company leveraging OCI for maximum business results. 13:24 Lois: Uber handles more than a million trips per hour, and Oracle Cloud Infrastructure is an integral part of making that possible. Can you walk us through how OCI supports Uber's needs? David: Uber, the world's largest on-demand mobility platform, handles over 1 million trips every hour. And behind the scenes, OCI is helping to make that possible. In 2023, Uber began migrating thousands of microservices, data platforms, and AI models to OCI. Why? Because OCI provides the automation, flexibility, and infrastructure scale needed to support Uber's explosive growth. Today, Uber uses OCI Compute to handle massive trips serving traffic and OCI Object Storage to replace one of the largest Hadoop-based data environments in the industry. They needed global reach and multi-cloud compatibility, and OCI delivered. But it's not just scale, it's intelligence. Uber runs dozens of AI models on OCI to support real-time predictions up 14 million per second. From ride pricing to traffic patterns, this AI layer powers every trip behind the scenes. And by shifting stateless workloads to OCI Ampere ARM Compute servers, Uber reduced cost while increasing CPU efficiency. For AI inferencing, Uber uses OCI's AI infrastructure to strike the perfect balance between speed, throughput, and cost. So the next time you use your Uber app to schedule a ride, consider what happens behind the scenes with OCI. 15:18 Lois: That's so impressive! Thank you, David, for those wonderful stories, and Tijo for all of your insights. Whether you're in strategy, finance, or transformation, we hope you're walking away with a clearer view of the business value OCI can bring. Nikita: Yeah, and if you want to learn more about the topics we discussed today, visit mylearn.oracle.com and search for the Cloud Business Jumpstart course. Until next time, this is Nikita Abraham… Lois: And Lois Houston signing off! 15:48 That's all for this episode of the Oracle University Podcast. If you enjoyed listening, please click Subscribe to get all the latest episodes. We'd also love it if you would take a moment to rate and review us on your podcast app. See you again on the next episode of the Oracle University Podcast.

It's all about Data Pipelines. Join Pure Storage Field Solution Architect Chad Hendron and Solutions Director Andrew Silifant for a deep dive into the evolution of data management, focusing on the Data Lakehouse architecture and its role in the age of AI and ML. Our discussion looks at the Data Lakehouse as a powerful combination of a data lake and a data warehouse, solving problems like "data swamps” and proprietary formats of older systems. Viewers will learn about technological advancements, such as object storage and open table formats, that have made this new architecture possible, allowing for greater standardization and multiple tooling functions to access the same data. Our guests also explore current industry trends, including a look at Dremio's 2025 report showing the rapid adoption of Data Lakehouses, particularly as a replacement for older, inefficient systems like cloud data warehouses and traditional data lakes. Gain insight into the drivers behind this migration, including the exponential growth of unstructured data and the need to control cloud expenditure by being more prescriptive about what data is stored in the cloud versus on-premises. Andrew provides a detailed breakdown of processing architectures and the critical importance of meeting SLAs to avoid costly and frustrating pipeline breaks in regulated industries like banking. Finally, we provide practical takeaways and a real-world case study. Chad shares a customer success story about replacing a large, complex Hadoop cluster with a streamlined Dremio and Pure Storage solution, highlighting the massive reduction in physical space, power consumption, and management complexity. Both guests emphasize the need for better governance practices to manage cloud spend and risk. Andrew underscores the essential, full-circle role of databases—from the "alpha" of data creation to the "omega" of feature stores and vector databases for modern AI use cases like Retrieval-Augmented Generation (RAG). Tune in to understand how a holistic data strategy, including Pure's Enterprise Data Cloud, can simplify infrastructure and future-proof your organization for the next wave of data-intensive workloads. To learn more, visit https://www.purestorage.com/solutions/ai/data-warehouse-streaming-analytics.html Check out the new Pure Storage digital customer community to join the conversation with peers and Pure experts: https://purecommunity.purestorage.com/ 00:00 Intro and Welcome 03:15 Data Lakehouse Primer 08:31 Stat of the Episode on Lakehouse Usage 10:50 Challenges with Data Pipeline access 13:58 Assessing Organization Success with Data Cleaning 16:07 Use Cases for the Data Lakehouse 20:41 Case Study on Data Lakehouse Use Case 24:11 Hot Takes Segment

Discover how Anton, one of the most experienced AI and machine learning experts in the industry, discusses his evolution from building matching algorithms at Bright.com to navigating today's AI landscape. With over a decade of experience in large-scale recommendation systems and data processing, Anton reveals how the fundamentals of big data and embarrassingly parallel computing shaped modern AI applications, and why human-centered design is now more critical than ever in building AI-powered products.Episode Timestamps:- 00:00 - Introduction and welcome- 00:47 - Anton's experience at Bright.com and the recruitment matching problem- 03:23 - The big data era, Hadoop, and Spark in the web 2.0 world- 05:00 - Embarrassingly parallel computing and large-scale data processing- 10:00 - How recommendation algorithms work at scale in recruitment- 20:00 - Evolution of the data scientist role over time- 30:00 - Building intelligent matching systems- 40:00 - Understanding user needs and organizational workflows- 50:00 - The shift toward human-centered AI design- 57:30 - Providing pre-filled content and personalization- 58:02 - Anton's vision for the future and human values- 01:01:46 - Closing thoughts on humanity and technologyAbout Anton:Anton is a veteran machine learning and AI engineer with over a decade of experience building large-scale recommendation and matching systems. He played a key role at Bright.com, a two-sided recruitment platform that pioneered transparent scoring algorithms for candidate-job matching. His expertise spans big data infrastructure, recommendation systems, user experience optimization, and the human-centered approach to AI product development.Resources Mentioned:- Hadoop and Spark (big data processing frameworks)- Big data infrastructure and cloud computing platforms- Two-sided marketplace architectures- Recommendation algorithms and matching systems- User workflow analysis toolsPartner Links:- Book Enterprise Training — https://www.upscaile.com/- Subscribe to our free newsletter — https://www.theaireport.ai/subscribe-theaireport-youtubeHashtags:#AIAgents #MachineLearningSystems #RecruitmentTechnology #BigData #DataScience #RecommendationAlgorithms #HadoopSpark #AIProductDesign #HumanCenteredAI #LargeScaleML #CloudComputing #AIStrategy #FutureOfWork #TechLeadership #CareerDevelopment

Building Real-time Systems for Apple, Nike & more ft. Adi Polak | Ep. 1

The Confluent Developer Podcast is here! For this first episode, Tim Berglund talks to his co-host, Adi Polak (Confluent), about her career in distributed data systems. Her first job: neighborhood dogwalker. Her challenge/theme: early Hadoop, working at Akamai on data optimization and real-time threat detection for huge global customers like Apple, Nike, Facebook and others, and the power of collaboration. SEASON 2 Hosted by Tim Berglund, Adi Polak and Viktor Gamov Produced and Edited by Noelle Gallagher, Peter Furia and Nurie Mohamed Music by Coastal Kites Artwork by Phil Vo

An airhacks.fm conversation with Adam Dudczak (@maneo) about: early programming experiences with Commodore 64 and Pascal, demo scene participation through postal mail swapping of floppy disks, writing assembly code for 64K intros with music and graphics, developing digital library systems using Java Servlets and Hibernate, involvement in reactivating Poznan Java User Group in 2007, NetBeans Dream Team and NetBeans World Tour, appearing on Polish breakfast TV to discuss Java programming, working at Supercomputing Center on cultural heritage digitization projects, transitioning to EJB 3.0 and Glassfish based on conference inspirations, joining allegro in 2014 to rewrite search functionality from PHP to Java microservices, handling 14K requests per second with Solr-based search infrastructure, migrating big data stack from on-premise Hadoop to Google Cloud Platform, developing private banking application for children using Spring and Hibernate then migrating to Google Sheets with 70 lines of JavaScript, discussing public cloud cost optimization strategies, comparing AWS Lambda versus EC2 versus container services based on traffic patterns, emphasizing removal of code when moving to public cloud to leverage managed services, standardization benefits of Java EE for long-term maintenance and migration, quarkus as modern framework supporting old Jakarta EE code with fast startup times, importance of choosing appropriate persistence layer (S3 vs relational databases) based on cloud costs, serverless architectures for enterprise applications with predictable low traffic, differences between AWS Azure and GCP service offerings and pricing models, Turbo assembler project klatwa Adam Dudczak on twitter: @maneo

#23 - On devait parler de Gladia... on a fini par parler de presque tout avec Jean-Louis Quéguiner

Bienvenue dans "IA Pas que la Data" ! Dans cet épisode un peu particulier, on plonge au cœur de la reconnaissance vocale avec Jean-Louis Quéguiner, CEO de Gladia, et on dérive... beaucoup. On y découvre Gladia, une plateforme speech to text, avec une spécialité : le multilinguisme et la tolérance aux accents du monde entier.On explorera les cas d'usages concrets pour l'IA, de la médecine à la relation client, en passant par les systèmes bancaires.Mais l'épisode prend vite de l'ampleur : on parle de souveraineté des données, des enjeux de l'IA, de la géopolitique, et on se demande même si OpenAI va survivre. Bref, préparez-vous à une conversation passionnante, pleine d'humour et de rebondissements, avec un expert qui n'a pas peur de sortir des sentiers battus.(00:00) - Introduction et présentation de Jean-Louis Queguiner (01:42) - Big Data, Hadoop et les premiers pas dans l'IA (03:49) - OVH : Challenges et opportunités Big Data (06:43) - Les leçons des startups et l'approche MVP (09:07) - La genèse de Gladia : De l'Idée à la réalité (11:22) - Gladia et l'Infrastructure OVH : Indépendance et synergies (15:04) - Le multilinguisme : Un atout stratégique pour l'IA en Europe (17:13) - Souveraineté numérique : Cloud Act, Cloud et Data (28:35) - Reconnaissance vocale : Technologies et défis (31:27) - Cas d'usage avancés : médecine, support et localisation (42:26) - Accessibilité et IA : L'impact de la voix (50:04) - Confidentialité, modèles économiques et protection des données (52:08) - OpenAI : Modèles, défis financiers et l'avenir Cet épisode vous a plus, alors, nous comptons sur vous pour mettre 5 étoiles et le partager autour de vous !

#194 Was wurde aus MapReduce und der funktionalen Eleganz in verteilten Systemen?

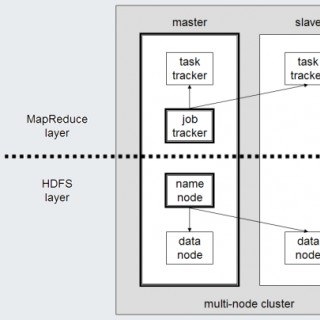

MapReduce: Ein Deep DiveIm Jahr 2004 war die Verarbeitung von großen Datenmengen eine richtige Herausforderung. Einige Firmen hatten dafür sogenannte Supercomputer. Andere haben nur mit der Schulter gezuckt und auf das Ende ihrer Berechnung gewartet. Google war einer der Player, der zwar große Datenmengen hatte und diese auch verarbeiten wollte, jedoch keine Supercomputer zur Verfügung hatte. Oder besser gesagt: Nicht das Geld in die Hand nehmen wollte.Was macht man also, wenn man ein Problem hat? Eine Lösung suchen. Das hat Jeffrey Dean und sein Team getan. Das Ergebnis? Ein revolutionäres Paper, wie man mittels MapReduce große Datenmengen verteilt auf einfacher Commodity-Hardware verarbeiten kann.In dieser Podcast-Episode schauen wir uns das mal genauer an. Wir klären, was MapReduce ist, wie es funktioniert, warum MapReduce so revolutionär war, wie es mit Hardware-Ausfällen umgegangen ist, welche Herausforderungen in der Praxis hatte bzw. immer noch hat, was das Google File System, Hadoop und HDFS damit zu tun haben und ordnen MapReduce im Kontext der heutigen Technologien mit Cloud und Co ein.Eine weitere Episode “Papers We Love”.Bonus: Hadoop ist wohl der Elefant im Raum.Unsere aktuellen Werbepartner findest du auf https://engineeringkiosk.dev/partnersDas schnelle Feedback zur Episode:

E550 Shayde Christian, Chief Data & Analytics Officer at Cloudera

Today's guest is Shayde Christian, Chief Data & Analytics Officer at Cloudera. Founded in 2008, Cloudera believes that data can make what is impossible today, possible tomorrow. They empower people to transform complex data into clear and actionable insights. Cloudera delivers an enterprise data cloud for any data, anywhere, from the Edge to AI. Powered by the relentless innovation of the open source community, Cloudera advances digital transformation for the world's largest enterprises.Shayde leads all data and analytics functions at Cloudera, overseeing data science, machine learning, business intelligence, data architecture, data engineering, platform engineering and DevOps. He helps customers navigate their hybrid cloud migration and optimization journeys. Shayde has built or revitalized six enterprise data analytics organizations for Fortune 500 companies, global tech firms and startups.In this episode, Shayde talks about:His unique career journey, following passions and pursuing a true calling,How Cloudera evolved beyond Hadoop, pioneering AI-driven data solutions,Driving success through secure AI, data quality and feedback,Scalability, ROI, killer apps and AI-driven automation,Why attracting AI talent is hard; teams bootstrap skills internally,Why Cloudera is a great place to work with a focus on innovation and purpose,How his team aims to bridge the tech-business gap using AI,Leading the software evolution, investing in generative AI for success

Le BigDataHebdo reçoit Florian Caringi, responsable des plateformes Data & IA au sein du groupe BPCE. On discute de l'évolution des architectures Big Data, de Hadoop aux environnements hybrides et cloud, avec une adoption massive de Google Cloud (BigQuery, Vertex AI) pour des usages analytiques et data science.Florian partage son expérience sur les défis de migration, de FinOps, et l'intégration des IA génératives. Une discussion passionnante sur la modernisation des infrastructures et l'impact des nouvelles technologies dans les grandes organisations.Show notes et chapitres sur http://bigdatahebdo.com/podcast/episode-212-cloud-hybride-bpce/

Do You Really Need That New Data Tool, or is a Spreadsheet Good Enough?

This morning, a great article came across my feed that gave me PTSD, asking if Iceberg is the Hadoop of the Modern Data Stack? In this rant, I bring the discussion back to a central question you should ask with any hot technology - do you need it at all? Do you need a tool built for the top 1% of companies at a sufficient data scale? Or is a spreadsheet good enough? Link: https://blog.det.life/apache-iceberg-the-hadoop-of-the-modern-data-stack-c83f63a4ebb9

Tackling AI, Cloud Costs, and Legacy Systems with Miles Ward

Corey Quinn chats with Miles Ward, CTO of SADA, about SADA's recent acquisition by Insight and its impact on scaling the company's cloud services. Ward explains how Insight's backing allows SADA to take on more complex projects, such as multi-cloud migrations and data center transitions. They also discuss AI's growing role in business, the challenges of optimizing cloud AI costs, and the differences between cloud-to-cloud and data center migrations. Corey and Miles also share their takes on domain registrars and Corey gives a glimpse into his Raspberry Pi Kubernetes setup.Show Highlights(00:00) Intro(00:48) Backblaze sponsor read(2:04) Google's support of SADA being acquired by Insight(2:44) How the skills SADA invested in affects the cases they accept (5:14) Why it's easier to migrate from one cloud to another than from data center to cloud(7:06) Customer impact from the Broadcom pricing changes(10:40) The current cost of AI(13:55) Why the scale of AI makes it difficult to understand its current business impact(15:43) The challenges of monetizing AI(17:31) Micro and macro scale perspectives of AI(21:16) Amazon's new habit of slowly killing of services(26:55) Corey's policy to never use a domain registrar with the word “daddy” in their name(32:46) Where to find more from Miles and SADAAbout Miles WardAs Chief Technology Officer at SADA, Miles Ward leads SADA's cloud strategy and solutions capabilities. His remit includes delivering next-generation solutions to challenges in big data and analytics, application migration, infrastructure automation, and cost optimization; reinforcing our engineering culture; and engaging with customers on their most complex and ambitious plans around Google Cloud.Previously, Miles served as Director and Global Lead for Solutions at Google Cloud. He founded the Google Cloud's Solutions Architecture practice, launched hundreds of solutions, built Style-Detection and Hummus AI APIs, built CloudHero, designed the pricing and TCO calculators, and helped thousands of customers like Twitter who migrated the world's largest Hadoop cluster to public cloud and Audi USA who re-platformed to k8s before it was out of alpha, and helped Banco Itau design the intercloud architecture for the bank of the future.Before Google, Miles helped build the AWS Solutions Architecture team. He wrote the first AWS Well-Architected framework, proposed Trusted Advisor and the Snowmobile, invented GameDay, worked as a core part of the Obama for America 2012 “tech” team, helped NASA stream the Curiosity Mars Rover landing, and rebooted Skype in a pinch.Earning his Bachelor of Science in Rhetoric and Media Studies from Willamette University, Miles is a three-time technology startup entrepreneur who also plays a mean electric sousaphone.LinksProfessional site: https://sada.com/ LinkedIn: https://www.linkedin.com/in/milesward/ Twitter: https://twitter.com/mileswardSponsorBackblaze: https://www.backblaze.com/

In this Screaming in the Cloud Replay, we're revisiting our conversation with Miles War — perhaps the closest thing Google Cloud has to Corey Quinn. With a wit and sharpness at hand, and an entire backup retinue of trumpets, trombones, and various brass horns, Miles is here to join the conversation about what all is going on at Google Cloud. Miles breaks down SADA and their partnership with Google Cloud. He goes into some details on what GCP has been up to, and talks about the various areas they are capitulating forward. Miles talks about working with Thomas Kurian, who is the only who counts since he follows Corey on Twitter, and the various profundities that GCP has at hand.Show Highlights:(0:00) Intro(1:38) Sonrai Security sponsor read(2:40) Reliving Google Cloud Next 2021(7:24) Unlikable, yet necessary change at Google(11:41) Lack of Focus in the Cloud(18:03) Google releases benefitting developers(20:57) The rise of distributed databases(24:12) Backblaze sponsor read(24:41) Arguments for (and against) going multi-cloud(26:49) The problem with Google Cloud outages(33:01) Data transfer fees(37:49) Where you can find more from MilesAbout Miles WardAs Chief Technology Officer at SADA, Miles Ward leads SADA's cloud strategy and solutions capabilities. His remit includes delivering next-generation solutions to challenges in big data and analytics, application migration, infrastructure automation, and cost optimization; reinforcing our engineering culture; and engaging with customers on their most complex and ambitious plans around Google Cloud.Previously, Miles served as Director and Global Lead for Solutions at Google Cloud. He founded the Google Cloud's Solutions Architecture practice, launched hundreds of solutions, built Style-Detection and Hummus AI APIs, built CloudHero, designed the pricing and TCO calculators, and helped thousands of customers like Twitter who migrated the world's largest Hadoop cluster to public cloud and Audi USA who re-platformed to k8s before it was out of alpha, and helped Banco Itau design the intercloud architecture for the bank of the future.Before Google, Miles helped build the AWS Solutions Architecture team. He wrote the first AWS Well-Architected framework, proposed Trusted Advisor and the Snowmobile, invented GameDay, worked as a core part of the Obama for America 2012 “tech” team, helped NASA stream the Curiosity Mars Rover landing, and rebooted Skype in a pinch.Earning his Bachelor of Science in Rhetoric and Media Studies from Willamette University, Miles is a three-time technology startup entrepreneur who also plays a mean electric sousaphone.Links:SADA.com: https://sada.comTwitter: https://twitter.com/mileswardEmail: miles@sada.comOriginal episode:https://www.lastweekinaws.com/podcast/screaming-in-the-cloud/gcp-s-many-profundities-with-miles-ward/SponsorsSonrai Security: sonrai.co/access24Backblaze: backblaze.com

DataOps, Observability, and The Cure for Data Team Blues - Christopher Bergh

0:00 hi everyone Welcome to our event this event is brought to you by data dos club which is a community of people who love 0:06 data and we have weekly events and today one is one of such events and I guess we 0:12 are also a community of people who like to wake up early if you're from the states right Christopher or maybe not so 0:19 much because this is the time we usually have uh uh our events uh for our guests 0:27 and presenters from the states we usually do it in the evening of Berlin time but yes unfortunately it kind of 0:34 slipped my mind but anyways we have a lot of events you can check them in the 0:41 description like there's a link um I don't think there are a lot of them right now on that link but we will be 0:48 adding more and more I think we have like five or six uh interviews scheduled so um keep an eye on that do not forget 0:56 to subscribe to our YouTube channel this way you will get notified about all our future streams that will be as awesome 1:02 as the one today and of course very important do not forget to join our community where you can hang out with 1:09 other data enthusiasts during today's interview you can ask any question there's a pin Link in live chat so click 1:18 on that link ask your question and we will be covering these questions during the interview now I will stop sharing my 1:27 screen and uh there is there's a a message in uh and Christopher is from 1:34 you so we actually have this on YouTube but so they have not seen what you wrote 1:39 but there is a message from to anyone who's watching this right now from Christopher saying hello everyone can I 1:46 call you Chris or you okay I should go I should uh I should look on YouTube then okay yeah but anyways I'll you don't 1:53 need like you we'll need to focus on answering questions and I'll keep an eye 1:58 I'll be keeping an eye on all the question questions so um 2:04 yeah if you're ready we can start I'm ready yeah and you prefer Christopher 2:10 not Chris right Chris is fine Chris is fine it's a bit shorter um 2:18 okay so this week we'll talk about data Ops again maybe it's a tradition that we talk about data Ops every like once per 2:25 year but we actually skipped one year so because we did not have we haven't had 2:31 Chris for some time so today we have a very special guest Christopher Christopher is the co-founder CEO and 2:37 head chef or hat cook at data kitchen with 25 years of experience maybe this 2:43 is outdated uh cuz probably now you have more and maybe you stopped counting I 2:48 don't know but like with tons of years of experience in analytics and software engineering Christopher is known as the 2:55 co-author of the data Ops cookbook and data Ops Manifesto and it's not the 3:00 first time we have Christopher here on the podcast we interviewed him two years ago also about data Ops and this one 3:07 will be about data hops so we'll catch up and see what actually changed in in 3:13 these two years and yeah so welcome to the interview well thank you for having 3:19 me I'm I'm happy to be here and talking all things related to data Ops and why 3:24 why why bother with data Ops and happy to talk about the company or or what's changed 3:30 excited yeah so let's dive in so the questions for today's interview are prepared by Johanna berer as always 3:37 thanks Johanna for your help so before we start with our main topic for today 3:42 data Ops uh let's start with your ground can you tell us about your career Journey so far and also for those who 3:50 have not heard have not listened to the previous podcast maybe you can um talk 3:55 about yourself and also for those who did listen to the previous you can also maybe give a summary of what has changed 4:03 in the last two years so we'll do yeah so um my name is Chris so I guess I'm 4:09 a sort of an engineer so I spent about the first 15 years of my career in 4:15 software sort of working and building some AI systems some non- AI systems uh 4:21 at uh Us's NASA and MIT linol lab and then some startups and then um 4:30 Microsoft and then about 2005 I got I got the data bug uh I think you know my 4:35 kids were small and I thought oh this data thing was easy and I'd be able to go home uh for dinner at 5 and life 4:41 would be fine um because I was a big you started your own company right and uh it didn't work out that way 4:50 and um and what was interesting is is for me it the problem wasn't doing the 4:57 data like I we had smart people who did data science and data engineering the act of creating things it was like the 5:04 systems around the data that were hard um things it was really hard to not have 5:11 errors in production and I would sort of driving to work and I had a Blackberry at the time and I would not look at my 5:18 Blackberry all all morning I had this long drive to work and I'd sit in the parking lot and take a deep breath and 5:24 look at my Blackberry and go uh oh is there going to be any problems today and I'd be and if there wasn't I'd walk and 5:30 very happy um and if there was I'd have to like rce myself um and you know and 5:36 then the second problem is the team I worked for we just couldn't go fast enough the customers were super 5:42 demanding they didn't care they all they always thought things should be faster and we are always behind and so um how 5:50 do you you know how do you live in that world where things are breaking left and right you're terrified of making errors 5:57 um and then second you just can't go fast enough um and it's preh Hadoop era 6:02 right it's like before all this big data Tech yeah before this was we were using 6:08 uh SQL Server um and we actually you know we had smart people so we we we 6:14 built an engine in SQL Server that made SQL Server a column or 6:20 database so we built a column or database inside of SQL Server um so uh 6:26 in order to make certain things fast and and uh yeah it was it was really uh it's not 6:33 bad I mean the principles are the same right before Hadoop it's it's still a database there's still indexes there's 6:38 still queries um things like that we we uh at the time uh you would use olap 6:43 engines we didn't use those but you those reports you know are for models it's it's not that different um you know 6:50 we had a rack of servers instead of the cloud um so yeah and I think so what what I 6:57 took from that was uh it's just hard to run a team of people to do do data and analytics and it's not 7:05 really I I took it from a manager perspective I started to read Deming and 7:11 think about the work that we do as a factory you know and in a factory that produces insight and not automobiles um 7:18 and so how do you run that factory so it produces things that are good of good 7:24 quality and then second since I had come from software I've been very influenced 7:29 by by the devops movement how you automate deployment how you run in an agile way how you 7:35 produce um how you how you change things quickly and how you innovate and so 7:41 those two things of like running you know running a really good solid production line that has very low errors 7:47 um and then second changing that production line at at very very often they're kind of opposite right um and so 7:55 how do you how do you as a manager how do you technically approach that and 8:00 then um 10 years ago when we started data kitchen um we've always been a profitable company and so we started off 8:07 uh with some customers we started building some software and realized that we couldn't work any other way and that 8:13 the way we work wasn't understood by a lot of people so we had to write a book and a Manifesto to kind of share our our 8:21 methods and then so yeah we've been in so we've been in business now about a little over 10 8:28 years oh that's cool and uh like what 8:33 uh so let's talk about dat offs and you mentioned devops and how you were inspired by that and by the way like do 8:41 you remember roughly when devops as I think started to appear like when did people start calling these principles 8:49 and like tools around them as de yeah so agile Manifesto well first of all the I 8:57 mean I had a boss in 1990 at Nasa who had this idea build a 9:03 little test a little learn a lot right that was his Mantra and then which made 9:09 made a lot of sense um and so and then the sort of agile software Manifesto 9:14 came out which is very similar in 2001 and then um the sort of first real 9:22 devops was a guy at Twitter started to do automat automated deployment you know 9:27 push a button and that was like 200 Nish and so the first I think devops 9:33 Meetup was around then so it's it's it's been 15 years I guess 6 like I was 9:39 trying to so I started my career in 2010 so I my first job was a Java 9:44 developer and like I remember for some things like we would just uh SFTP to the 9:52 machine and then put the jar archive there and then like keep our fingers crossed that it doesn't break uh uh like 10:00 it was not really the I wouldn't call it this way right you were deploying you 10:06 had a Dey process I put it yeah 10:11 right was that so that was documented too it was like put the jar on production cross your 10:17 fingers I think there was uh like a page on uh some internal Viki uh yeah that 10:25 describes like with passwords and don't like what you should do yeah that was and and I think what's interesting is 10:33 why that changed right and and we laugh at it now but that was why didn't you 10:38 invest in automating deployment or a whole bunch of automated regression 10:44 tests right that would run because I think in software now that would be rare 10:49 that people wouldn't use C CD they wouldn't have some automated tests you know functional 10:56 regression tests that would be the exception whereas that the norm at the beginning of your career and so that's 11:03 what's interesting and I think you know if we if we talk about what's changed in the last two three years I I think it is 11:10 getting more standard there are um there's a lot more companies who are 11:15 talking data Ops or data observability um there's a lot more tools that are a lot more people are 11:22 using get in data and analytics than ever before I think thanks to DBT um and 11:29 there's a lot of tools that are I think getting more code Centric right that 11:35 they're not treating their configuration like a black box there there's several 11:41 bi tools that tout the fact that they that they're uh you know they're they're git Centric you know and and so and that 11:49 they're testable and that they have apis so things like that I think people maybe let's take a step back and just do a 11:57 quick summary of what data Ops data Ops is and then we can talk about like what changed in the last two years sure so I 12:06 guess it starts with a problem and that it's it sort of 12:11 admits some dark things about data and analytics and that we're not really successful and we're not really happy um 12:19 and if you look at the statistics on sort of projects and problems and even 12:25 the psychology like I think about a year or two we did a survey of 12:31 data Engineers 700 data engineers and 78% of them wanted their job to come with a therapist and 50% were thinking 12:38 of leaving the career altogether and so why why is everyone sort of unhappy well I I I think what happens is 12:46 teams either fall into two buckets they're sort of heroic teams who 12:52 are doing their they're working night and day they're trying really hard for their customer um and then they get 13:01 burnt out and then they quit honestly and then the second team have wrapped 13:06 their projects up in so much process and proceduralism and steps that doing 13:12 anything is sort of so slow and boring that they again leave in frustration um 13:18 or or live in cynicism and and that like the only outcome is quit and 13:24 start uh woodworking yeah the only outcome really is quit and start working 13:29 and um as a as a manager I always hated that right because when when your team 13:35 is either full of heroes or proceduralism you always have people who have the whole system in their head 13:42 they're certainly key people and then when they leave they take all that knowledge with them and then that 13:48 creates a bottleneck and so both of which are aren aren't and I think the 13:53 main idea of data Ops is there's a balance between fear and herois 14:00 that you can live you don't you know you don't have to be fearful 95% of the time maybe one or two% it's good to be 14:06 fearful and you don't have to be a hero again maybe one or two per it's good to be a hero but there's a balance um and 14:13 and in that balance you actually are much more prod

A veteran of early Twitter's fail whale wars, Dmitriy joins the show to chat about the time when 70% of the Hadoop cluster got accidentally deleted, the financial reality of writing a book, and how to navigate acquisitions. Segments: (00:00:00) The Infamous Hadoop Outage (00:02:36) War Stories from Twitter's Early Days (00:04:47) The Fail Whale Era (00:06:48) The Hadoop Cluster Shutdown (00:12:20) “First Restore the Service Then Fix the Problem. Not the Other Way Around.” (00:14:10) War Rooms and Organic Decision-Making (00:16:16) The Importance of Communication in Incident Management (00:19:07) That Time When the Data Center Caught Fire (00:21:45) The "Best Email Ever" at Twitter (00:25:34) The Importance of Failing (00:27:17) Distributed Systems and Error Handling (00:29:49) The Missing README (00:33:13) Agile and Scrum (00:38:44) The Financial Reality of Writing a Book (00:43:23) Collaborative Writing Is Like Open-Source Coding (00:44:41) Finding a Publisher and the Role of Editors (00:50:33) Defining the Tone and Voice of the Book (00:54:23) Acquisitions from an Engineer's Perspective (00:56:00) Integrating Acquired Teams (01:02:47) Technical Due Diligence (01:04:31) The Reality of System Implementation (01:06:11) Integration Challenges and Gotchas Show Notes: - Dmitriy Ryaboy on Twitter: https://x.com/squarecog - The Missing README: https://www.amazon.com/Missing-README-Guide-Software-Engineer/dp/1718501838 - Chris Riccomini on how to write a technical book: https://cnr.sh/essays/how-to-write-a-technical-book Stay in touch: - Make Ronak's day by signing up for our newsletter to get our favorites parts of the convo straight to your inbox every week :D https://softwaremisadventures.com/ Music: Vlad Gluschenko — Forest License: Creative Commons Attribution 3.0 Unported: https://creativecommons.org/licenses/by/3.0/deed.en

Software at Scale 60 - Data Platforms with Aravind Suresh

Aravind was a Staff Software Engineer at Uber, and currently works at OpenAI.Apple Podcasts | Spotify | Google PodcastsEdited TranscriptCan you tell us about the scale of data Uber was dealing with when you joined in 2018, and how it evolved?When I joined Uber in mid-2018, we were handling a few petabytes of data. The company was going through a significant scaling journey, both in terms of launching in new cities and the corresponding increase in data volume. By the time I left, our data had grown to over an exabyte. To put it in perspective, the amount of data grew by a factor of about 20 in just a three to four-year period.Currently, Uber ingests roughly a petabyte of data daily. This includes some replication, but it's still an enormous amount. About 60-70% of this is raw data, coming directly from online systems or message buses. The rest is derived data sets and model data sets built on top of the raw data.That's an incredible amount of data. What kinds of insights and decisions does this enable for Uber?This scale of data enables a wide range of complex analytics and data-driven decisions. For instance, we can analyze how many concurrent trips we're handling throughout the year globally. This is crucial for determining how many workers and CPUs we need running at any given time to serve trips worldwide.We can also identify trends like the fastest growing cities or seasonal patterns in traffic. The vast amount of historical data allows us to make more accurate predictions and spot long-term trends that might not be visible in shorter time frames.Another key use is identifying anomalous user patterns. For example, we can detect potentially fraudulent activities like a single user account logging in from multiple locations across the globe. We can also analyze user behavior patterns, such as which cities have higher rates of trip cancellations compared to completed trips.These insights don't just inform day-to-day operations; they can lead to key product decisions. For instance, by plotting heat maps of trip coordinates over a year, we could see overlapping patterns that eventually led to the concept of Uber Pool.How does Uber manage real-time versus batch data processing, and what are the trade-offs?We use both offline (batch) and online (real-time) data processing systems, each optimized for different use cases. For real-time analytics, we use tools like Apache Pinot. These systems are optimized for low latency and quick response times, which is crucial for certain applications.For example, our restaurant manager system uses Pinot to provide near-real-time insights. Data flows from the serving stack to Kafka, then to Pinot, where it can be queried quickly. This allows for rapid decision-making based on very recent data.On the other hand, our offline flow uses the Hadoop stack for batch processing. This is where we store and process the bulk of our historical data. It's optimized for throughput – processing large amounts of data over time.The trade-off is that real-time systems are generally 10 to 100 times more expensive than batch systems. They require careful tuning of indexes and partitioning to work efficiently. However, they enable us to answer queries in milliseconds or seconds, whereas batch jobs might take minutes or hours.The choice between batch and real-time depends on the specific use case. We always ask ourselves: Does this really need to be real-time, or can it be done in batch? The answer to this question goes a long way in deciding which approach to use and in building maintainable systems.What challenges come with maintaining such large-scale data systems, especially as they mature?As data systems mature, we face a range of challenges beyond just handling the growing volume of data. One major challenge is the need for additional tools and systems to manage the complexity.For instance, we needed to build tools for data discovery. When you have thousands of tables and hundreds of users, you need a way for people to find the right data for their needs. We built a tool called Data Book at Uber to solve this problem.Governance and compliance are also huge challenges. When you're dealing with sensitive customer data, you need robust systems to enforce data retention policies and handle data deletion requests. This is particularly challenging in a distributed system where data might be replicated across multiple tables and derived data sets.We built an in-house lineage system to track which workloads derive from what data. This is crucial for tasks like deleting specific data across the entire system. It's not just about deleting from one table – you need to track down and update all derived data sets as well.Data deletion itself is a complex process. Because most files in the batch world are kept immutable for efficiency, deleting data often means rewriting entire files. We have to batch these operations and perform them carefully to maintain system performance.Cost optimization is an ongoing challenge. We're constantly looking for ways to make our systems more efficient, whether that's by optimizing our storage formats, improving our query performance, or finding better ways to manage our compute resources.How do you see the future of data infrastructure evolving, especially with recent AI advancements?The rise of AI and particularly generative AI is opening up new dimensions in data infrastructure. One area we're seeing a lot of activity in is vector databases and semantic search capabilities. Traditional keyword-based search is being supplemented or replaced by embedding-based semantic search, which requires new types of databases and indexing strategies.We're also seeing increased demand for real-time processing. As AI models become more integrated into production systems, there's a need to handle more GPUs in the serving flow, which presents its own set of challenges.Another interesting trend is the convergence of traditional data analytics with AI workloads. We're starting to see use cases where people want to perform complex queries that involve both structured data analytics and AI model inference.Overall, I think we're moving towards more integrated, real-time, and AI-aware data infrastructure. The challenge will be balancing the need for advanced capabilities with concerns around cost, efficiency, and maintainability. This is a public episode. If you would like to discuss this with other subscribers or get access to bonus episodes, visit www.softwareatscale.dev

Apache Iceberg as the new S3 and Data Analytics with Starburst | Episode #86

Justin Borgman, Co-Founder and CEO of Starburst, explores the cutting-edge world of data management and analytics. Justin shares insights into Starburst's innovative use of Trino and Apache Iceberg, revolutionizing data warehousing and analytics. Learn about the company's journey, the evolution of data lakes, and the role of data science in modern enterprises. Episode Overview: In Episode 86 of Great Things with Great Tech, Anthony Spiteri chats with Justin Borgman, Co-Founder and CEO of Starburst. This episode dives into the transformative world of data management and analytics, exploring how Starburst leverages cutting-edge technologies like Trino and Apache Iceberg to revolutionize data warehousing. Justin shares his journey from founding Hadapt to leading Starburst, the evolution of data lakes, and the critical role of data science in today's tech landscape. Key Topics Discussed: Starburst's Origins and Vision: Justin discusses the founding of Starburst and the vision to democratize data access and eliminate data silos. Trino and Iceberg: The importance of Trino as a SQL query engine and Iceberg as an open table format in modern data management. Data Democratization: How Starburst enables organizations to perform high-performance analytics on data stored anywhere, avoiding vendor lock-in. Data Science Evolution: Insights into what it takes to become a data scientist today, emphasizing continuous learning and adaptability. Future of Data Management: The shift towards data and AI operating systems, and Starburst's role in shaping this future. Technology and Technology Partners Mentioned: Starburst, Trino, Apache Iceberg, Teradata, Hadoop, SQL, S3, Azure, Google Cloud Storage, Kafka, Dell, Data Lakehouse, AI, Machine Learning, Big Data, Data Governance, Data Ingestion, Data Management, Capacity Management, Data Security, Compliance, Open Source ☑️ Web: https://www.starburst.io ☑️ Support the Channel: https://ko-fi.com/gtwgt ☑️ Be on #GTwGT: Contact via Twitter @GTwGTPodcast or visit https://www.gtwgt.com ☑️ Subscribe to YouTube: https://www.youtube.com/@GTwGTPodcast?sub_confirmation=1 Check out the full episode on our platforms: YouTube: https://youtu.be/kmB_pjGb5Js Spotify: https://open.spotify.com/episode/2l9aZpvwhWcdmL0lErpUHC?si=x3YOQw_4Sp-vtdjyroMk3Q Apple Podcasts: https://podcasts.apple.com/us/podcast/darknet-diaries-with-jack-rhysider-episode-83/id1519439787?i=1000654665731 Follow Us: Website: https://gtwgt.com Twitter: https://twitter.com/GTwGTPodcast Instagram: https://instagram.com/GTwGTPodcast ☑️ Music: https://www.bensound.com

Stitching Together Enterprise Analytics With Microsoft Fabric

Summary Data lakehouse architectures have been gaining significant adoption. To accelerate adoption in the enterprise Microsoft has created the Fabric platform, based on their OneLake architecture. In this episode Dipti Borkar shares her experiences working on the product team at Fabric and explains the various use cases for the Fabric service. Announcements Hello and welcome to the Data Engineering Podcast, the show about modern data management Data lakes are notoriously complex. For data engineers who battle to build and scale high quality data workflows on the data lake, Starburst is an end-to-end data lakehouse platform built on Trino, the query engine Apache Iceberg was designed for, with complete support for all table formats including Apache Iceberg, Hive, and Delta Lake. Trusted by teams of all sizes, including Comcast and Doordash. Want to see Starburst in action? Go to dataengineeringpodcast.com/starburst (https://www.dataengineeringpodcast.com/starburst) and get $500 in credits to try Starburst Galaxy today, the easiest and fastest way to get started using Trino. Your host is Tobias Macey and today I'm interviewing Dipti Borkar about her work on Microsoft Fabric and performing analytics on data withou Interview Introduction How did you get involved in the area of data management? Can you describe what Microsoft Fabric is and the story behind it? Data lakes in various forms have been gaining significant popularity as a unified interface to an organization's analytics. What are the motivating factors that you see for that trend? Microsoft has been investing heavily in open source in recent years, and the Fabric platform relies on several open components. What are the benefits of layering on top of existing technologies rather than building a fully custom solution? What are the elements of Fabric that were engineered specifically for the service? What are the most interesting/complicated integration challenges? How has your prior experience with Ahana and Presto informed your current work at Microsoft? AI plays a substantial role in the product. What are the benefits of embedding Copilot into the data engine? What are the challenges in terms of safety and reliability? What are the most interesting, innovative, or unexpected ways that you have seen the Fabric platform used? What are the most interesting, unexpected, or challenging lessons that you have learned while working on data lakes generally, and Fabric specifically? When is Fabric the wrong choice? What do you have planned for the future of data lake analytics? Contact Info LinkedIn (https://www.linkedin.com/in/diptiborkar/) Parting Question From your perspective, what is the biggest gap in the tooling or technology for data management today? Closing Announcements Thank you for listening! Don't forget to check out our other shows. Podcast.__init__ (https://www.pythonpodcast.com) covers the Python language, its community, and the innovative ways it is being used. The Machine Learning Podcast (https://www.themachinelearningpodcast.com) helps you go from idea to production with machine learning. Visit the site (https://www.dataengineeringpodcast.com) to subscribe to the show, sign up for the mailing list, and read the show notes. If you've learned something or tried out a project from the show then tell us about it! Email hosts@dataengineeringpodcast.com (mailto:hosts@dataengineeringpodcast.com) with your story. Links Microsoft Fabric (https://www.microsoft.com/microsoft-fabric) Ahana episode (https://www.dataengineeringpodcast.com/ahana-presto-cloud-data-lake-episode-217) DB2 Distributed (https://www.ibm.com/docs/en/db2/11.5?topic=managers-designing-distributed-databases) Spark (https://spark.apache.org/) Presto (https://prestodb.io/) Azure Data (https://azure.microsoft.com/en-us/products#analytics) MAD Landscape (https://mattturck.com/mad2024/) Podcast Episode (https://www.dataengineeringpodcast.com/mad-landscape-2023-data-infrastructure-episode-369) ML Podcast Episode (https://www.themachinelearningpodcast.com/mad-landscape-2023-ml-ai-episode-21) Tableau (https://www.tableau.com/) dbt (https://www.getdbt.com/) Medallion Architecture (https://dataengineering.wiki/Concepts/Medallion+Architecture) Microsoft Onelake (https://learn.microsoft.com/fabric/onelake/onelake-overview) ORC (https://orc.apache.org/) Parquet (https://parquet.incubator.apache.org) Avro (https://avro.apache.org/) Delta Lake (https://delta.io/) Iceberg (https://iceberg.apache.org/) Podcast Episode (https://www.dataengineeringpodcast.com/iceberg-with-ryan-blue-episode-52/) Hudi (https://hudi.apache.org/) Podcast Episode (https://www.dataengineeringpodcast.com/hudi-streaming-data-lake-episode-209) Hadoop (https://hadoop.apache.org/) PowerBI (https://www.microsoft.com/power-platform/products/power-bi) Podcast Episode (https://www.dataengineeringpodcast.com/power-bi-business-intelligence-episode-154) Velox (https://velox-lib.io/) Gluten (https://gluten.apache.org/) Apache XTable (https://xtable.apache.org/) GraphQL (https://graphql.org/) Formula 1 (https://www.formula1.com/) McLaren (https://www.mclaren.com/) The intro and outro music is from The Hug (http://freemusicarchive.org/music/The_Freak_Fandango_Orchestra/Love_death_and_a_drunken_monkey/04_-_The_Hug) by The Freak Fandango Orchestra (http://freemusicarchive.org/music/The_Freak_Fandango_Orchestra/) / CC BY-SA (http://creativecommons.org/licenses/by-sa/3.0/)

EP 414 - Temporal Technologies Fmr. COO on Lessons From Scaling Multiple Product Businesses

What lessons can product leaders learn from scaling multiple product businesses? In this episode of Product Talk hosted by Sid Shaik, Temporal Technologies Fmr. COO Charles Zedlewski speaks on his career in product management and lessons learned from scaling multiple product businesses. Charles shares insights on becoming a successful PM without a technical background, emphasizing the importance of building technical depth over time. He discusses philosophies for collaborating closely with engineering teams and getting the "soul of the product" to live in their hearts. Charles also talks about his experience scaling Cloudera's Hadoop distribution and lessons from launching new features. Overall, the discussion provides perspectives on product growth strategies, frameworks for conceptualizing customer journeys, and approaches to partnerships between organizations.

081 | ČSOB – Tomáš Stegura, Executive Director & Roman Mašek, Director of Digital Services

S Tomášem a Romanem jsme si povídali o ČSOB, tedy bance, která v Česku spravuje vůbec největší objem peněz ze všech, nabízí služby firemní i retailové klientele a letos oslaví už 60. výročí. Probrali jsme hlavně digitální kanály, od virtuální asistentky Kate přes internetové až po mobilní bankovnictví, ale taky umělou inteligenci, machine learning a související starosti s bezpečností. Kluci totiž musí řešit i to, že se klienti stávají obětí stále sofistikovanějších podvodů

Your Data Can Do More... Find out how with Okera Data... The Story of Big Data doing Big Things | 016

016: Okera Data Management | Amandeep Khurana is CEO & Co-Founder of Cerebro Data. His company works with cloud native data management and governance software for enterprises. Before this he was the Principal Solutions Architect at Cloudera Inc, where he worked with Cloudera's customers to help them with their adoption and usage of the Hadoop ecosystem. *** For Show Notes, Key Points, Contact Info, & Resources Mentioned on this episode visit here: Amandeep Khurana Interview. *** Ready to part ways with your land in Florida? Visit our Sell My Land Florida webpage and discover a straightforward way to say goodbye to your property!

"Discovery Discoveries" with Alicia Brindisi and Bri LaVorgna

In Elixir Wizards Office Hours Episode 2, "Discovery Discoveries," SmartLogic's Project Manager Alicia Brindisi and VP of Delivery Bri LaVorgna join Elixir Wizards Sundi Myint and Owen Bickford on an exploratory journey through the discovery phase of the software development lifecycle. This episode highlights how collaboration and communication transform the client-project team dynamic into a customized expedition. The goal of discovery is to reveal clear business goals, understand the end user, pinpoint key project objectives, and meticulously document the path forward in a Product Requirements Document (PRD). The discussion emphasizes the importance of fostering transparency, trust, and open communication. Through a mutual exchange of ideas, we are able to create the most tailored, efficient solutions that meet the client's current goals and their vision for the future. Key topics discussed in this episode: Mastering the art of tailored, collaborative discovery Navigating business landscapes and user experiences with empathy Sculpting project objectives and architectural blueprints Continuously capturing discoveries and refining documentation Striking the perfect balance between flexibility and structured processes Steering clear of scope creep while managing expectations Tapping into collective wisdom for ongoing discovery Building and sustaining a foundation of trust and transparency Links mentioned in this episode: https://smartlogic.io/ Follow SmartLogic on social media: https://twitter.com/smartlogic Contact Bri: bri@smartlogic.io What is a PRD? https://en.wikipedia.org/wiki/Productrequirementsdocument Special Guests: Alicia Brindisi and Bri LaVorgna.

Adjacent and Between: Demystifying Digital Transformation with Power Apps and Power Automate